MCPG-ME

Monte Carlo Policy Gradient MAP-Elites: A synergy between Deep Reinforcement Learning and Quality Diversity Algorithms.

Summary

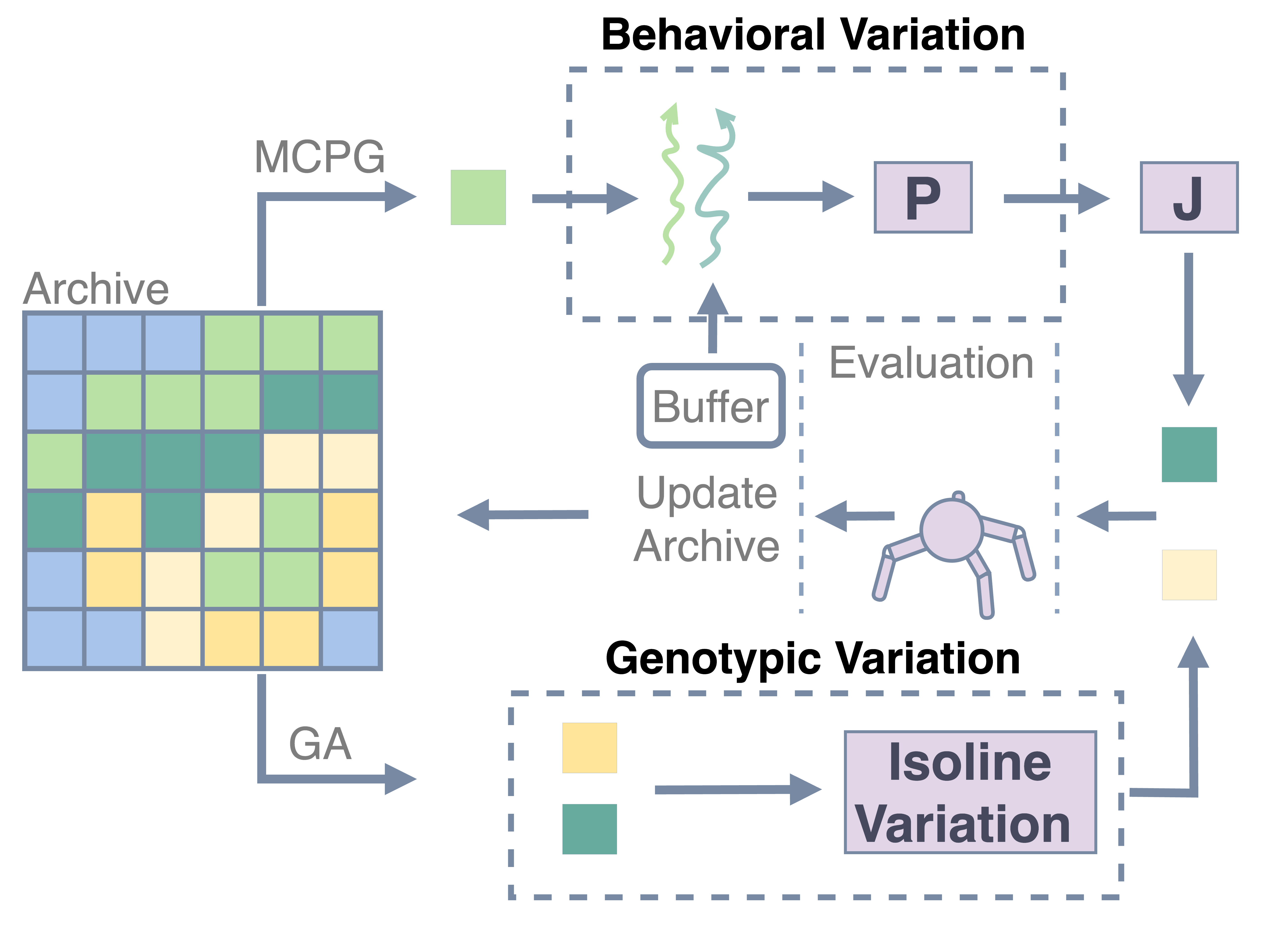

Quality-Diversity (QD) optimization is a family of evolutionary algorithms designed to produce a diverse set of high-performing solutions for a given problem. MAP-Elites is a simple yet effective QD optimization algorithm that has been applied successfully in a variety of fields. It has been used to teach robots how to adapt to damage, to generate aerodynamic designs, and to create content for games. MAP-Elites, however, is driven by genetic algorithms: it often struggles to evolve neural networks with many parameters and navigates the search space of the optimization problem inefficiently. Actor-critic based QD algorithms, such as PGA-MAP-Elites and DCRL, handle more complex models efficiently, but they suffer from slow execution times and depend heavily on the effectiveness of the actor-critic training, which compromises scalability. To address these challenges, this work introduces the Monte Carlo Policy Gradient MAP-Elites (MCPG-ME) algorithm, which uses a Monte Carlo Policy Gradient (MCPG) based variation operator to apply quality-guided mutations to the solutions. This novel variation operator allows MCPG-ME to optimize each solution independently, without relying on any actor-critic training, which improves scalability and runtime efficiency while maintaining competitive sample efficiency.
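To make the idea of a quality-guided variation operator more concrete, here is a minimal sketch of a MAP-Elites loop on a toy problem. It is an illustration under stated assumptions, not the MCPG-ME implementation, whose details are not yet published. The toy fitness, the descriptor, the grid size, the learning rate, and every function name (`fitness`, `descriptor`, `mcpg_variation`, `genetic_variation`, `map_elites`) are hypothetical. The policy is a fixed-variance Gaussian over a single action, the gradient is a plain REINFORCE-style Monte Carlo estimate, and the alternation between the two operators is an arbitrary choice made for the example.

```python
import random

GRID = 10      # cells per descriptor dimension (assumed value)
SIGMA = 0.1    # fixed exploration noise of the toy Gaussian policy (assumed)

def fitness(action):
    # Toy return: highest when both coordinates are close to 0.5.
    return -sum((a - 0.5) ** 2 for a in action)

def descriptor(theta):
    # Toy behavior descriptor: the policy mean, clipped to [0, 1].
    return tuple(min(max(t, 0.0), 1.0) for t in theta)

def cell_of(desc):
    # Map a descriptor in [0, 1]^2 to a cell index of the grid archive.
    return tuple(min(int(d * GRID), GRID - 1) for d in desc)

def genetic_variation(theta, rng):
    # Undirected Gaussian mutation, as in plain MAP-Elites.
    return [t + rng.gauss(0.0, 0.05) for t in theta]

def mcpg_variation(theta, rng, n_samples=32, lr=0.05):
    # Sample actions from the policy and score them (Monte Carlo rollouts).
    samples = [[t + rng.gauss(0.0, SIGMA) for t in theta] for _ in range(n_samples)]
    returns = [fitness(a) for a in samples]
    baseline = sum(returns) / n_samples
    # REINFORCE estimate: mean of (R - b) * (a - theta) / sigma^2.
    grad = [
        sum((r - baseline) * (a[i] - theta[i]) for a, r in zip(samples, returns))
        / (n_samples * SIGMA ** 2)
        for i in range(len(theta))
    ]
    # One gradient-ascent step on the policy mean.
    return [t + lr * g for t, g in zip(theta, grad)]

def try_insert(archive, theta):
    # Keep the candidate only if its cell is empty or it beats the current elite.
    cell = cell_of(descriptor(theta))
    f = fitness(theta)
    if cell not in archive or f > archive[cell][0]:
        archive[cell] = (f, theta)

def map_elites(iterations=2000, seed=0):
    rng = random.Random(seed)
    archive = {}
    for _ in range(20):  # random initial population
        try_insert(archive, [rng.random(), rng.random()])
    for it in range(iterations):
        _, parent = rng.choice(list(archive.values()))  # uniform elite selection
        operator = mcpg_variation if it % 2 == 0 else genetic_variation
        try_insert(archive, operator(parent, rng))
    return archive
```

Calling `map_elites()` returns a dictionary that maps grid cells to `(fitness, parameters)` pairs. In this toy setting the gradient step needs no critic network: each parent is improved using only returns sampled from its own policy, which is the property the paragraph above attributes to the MCPG operator.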

Results

Evaluations across various continuous control locomotion tasks demonstrate that MCPG-ME:

- Fast: Achieves high execution speeds, running significantly faster than the actor-critic based QD algorithms, in some cases up to nine times faster.
- Sample Efficient: Surpasses the state-of-the-art algorithm, DCRL, in some of the tasks and outperforms all the other baselines in most of the tasks.
- Scalable: Shows promising scalability under massive parallelization.
- Novel Solutions: Excels at finding a diverse set of solutions, achieving an equal or higher diversity score (coverage) than all competitors in all tasks.
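As a rough illustration of how the coverage mentioned above can be computed, the sketch below treats the archive as a dictionary from grid cells to the fitness of the elite stored there. The archive layout and the function names are assumptions made for this example. `qd_score` is a metric commonly reported in the QD literature and is included only for context; this page does not say which additional metrics the evaluation uses.

```python
def coverage(archive, n_cells):
    # Percentage of archive cells that hold a solution.
    return 100.0 * len(archive) / n_cells

def qd_score(archive):
    # Sum of the fitness values of all elites in the archive.
    return sum(archive.values())

def max_fitness(archive):
    # Fitness of the best elite in the archive.
    return max(archive.values())

# Hypothetical 2x2 archive with three filled cells.
archive = {(0, 0): 1.5, (0, 1): 2.0, (1, 1): 0.5}
print(coverage(archive, n_cells=4))  # 75.0
print(qd_score(archive))             # 4.0
print(max_fitness(archive))          # 2.0
```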

In these tasks, a solution is a walking behavior of the robot. The quality of a solution is how effectively the robot completes the given task, and the diversity of solutions is the range of different walking styles the robot can successfully employ. Some interesting solutions are shown below:

A hopping Walker

Ant going around the trap

A fast Walker

For technical details of the method and the official results, please stay tuned. The paper is coming soon!

Author

- Konstantinos Mitsides (konstantinos.mistides23@imperial.ac.uk)